

AI Pricing

Generative AI has taken the tech world by storm, powering everything from chatbots to coding assistants. But behind the wow factor lies a sobering reality for businesses: AI is expensive to serve. Unlike traditional software, which can be replicated at negligible cost, each AI interaction consumes significant computational resources.

These high cost-to-serve dynamics are creating a new kind of margin pressure that software companies haven’t faced before. This article explores the true cost of AI in 2025, why serving up “intelligence” isn’t cheap, and what it means for pricing strategies and profitability. We’ll look at how current large language model (LLM) infrastructure costs are squeezing gross margins, compare generative AI product economics to traditional SaaS, examine real-world examples (ChatGPT, Anthropic, GitHub Copilot, etc.), dive into Bill Gurley’s “negative gross margin” theory, and discuss implications for SaaS pricing leaders.

(Let’s look at the operational realities behind monetizing AI.)

Why is AI so expensive to serve? The answer lies in the compute-hungry nature of modern AI models. Large language models like GPT-4 consist of billions of parameters and require clusters of high-end GPUs (or specialized AI chips) to run inference (generate outputs) for each user query. This is very different from traditional software, where delivering service to one more user has almost zero marginal cost.

Every time someone asks ChatGPT a question or GitHub Copilot to suggest code, significant computing power is being harnessed on the backend, and someone is paying for those electricity and hardware cycles.

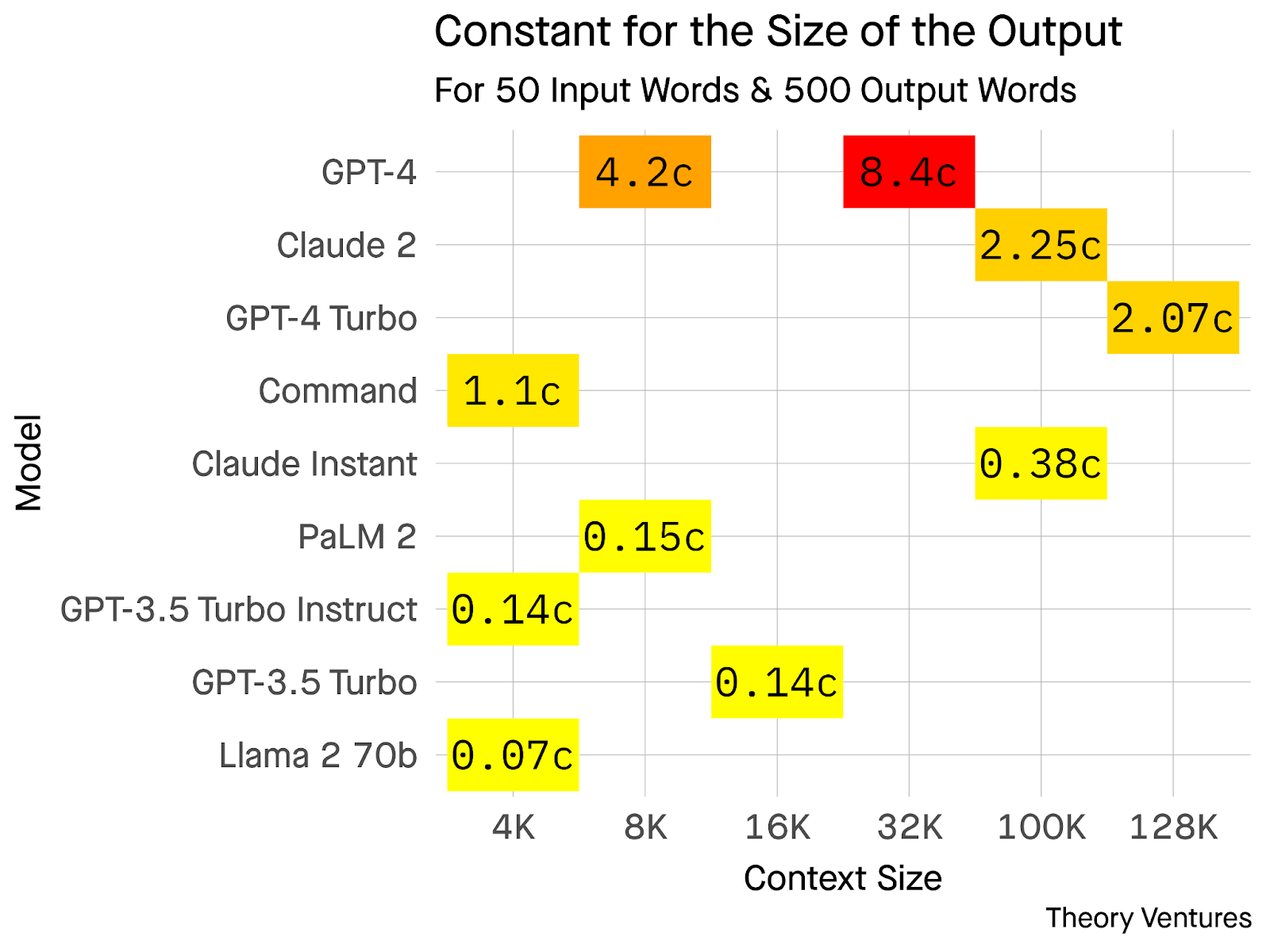

To put it in perspective, consider the per-query costs. According to Tom Tunguz, a typical AI query resulting in a few hundred words might cost anywhere from 0.03 cents up to 3.6 cents in compute, depending on the model used.

That may sound minuscule, but multiply it by millions of queries and it adds up fast. More complex requests or bigger context windows (longer prompts and answers) cost even more. Tunguz notes that generating a 500-word response with the latest GPT-4 can cost about $0.084 (8.4 cents), whereas an open-source model like Llama 2 might cost about $0.0007 (0.07 cents) for the same task.

In other words, the fanciest proprietary model can be 120x more expensive per query than a more efficient open-source alternative. The costs vary widely by model and approach, but the bottom line is clear: serving AI involves a non-trivial variable cost for each use.
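To make the scale effect concrete, here is a minimal Python sketch that applies the per-response figures quoted above to a monthly volume. The volume of one million responses is an illustrative assumption, not a figure from Tunguz.

```python
# Per-response compute costs quoted above (USD).
GPT4_COST_PER_RESPONSE = 0.084
LLAMA2_COST_PER_RESPONSE = 0.0007

# Hypothetical monthly volume, chosen only for illustration.
RESPONSES_PER_MONTH = 1_000_000


def monthly_compute_cost(cost_per_response: float, responses_per_month: int) -> float:
    """Total monthly compute spend for a given per-response cost."""
    return cost_per_response * responses_per_month


cost_ratio = GPT4_COST_PER_RESPONSE / LLAMA2_COST_PER_RESPONSE
gpt4_monthly = monthly_compute_cost(GPT4_COST_PER_RESPONSE, RESPONSES_PER_MONTH)
llama2_monthly = monthly_compute_cost(LLAMA2_COST_PER_RESPONSE, RESPONSES_PER_MONTH)

print(f"GPT-4 is about {cost_ratio:.0f}x the cost per response")
print(f"GPT-4:   ${gpt4_monthly:,.0f} per month")
print(f"Llama 2: ${llama2_monthly:,.0f} per month")
```

At that assumed volume, the same workload costs roughly $84,000 a month on the expensive model and roughly $700 on the cheap one.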

And while hardware and model optimizations are underway (OpenAI announced a 3x reduction in API costs in late 2023 as efficiency improved), the demand and expectations for quality are also rising.

So today, AI companies find themselves in a situation where costs don’t scale down easily with user growth. In fact, more usage directly means more expense, a stark contrast to the classic software model. It’s no surprise that this high cost-to-serve is sparking tough questions about gross margins and pricing.

All these infrastructure costs have a direct effect on profitability: they compress gross margins for AI products. Gross margin is revenue minus the cost of goods sold (COGS), expressed as a share of revenue; for software, COGS includes things like hosting and support. Traditional SaaS companies have enjoyed juicy gross margins in the range of 80-90%, because once the software is built, serving one more customer costs very little.

However, Generative AI flips that script.

Industry analyses show that AI-centric companies typically operate with lower gross margins, often in the 50-60% range. This margin is more akin to a services business than traditional software. For example:

Industry veteran Dave Kellogg summarized the shift well: “We’re moving from the movie business model (invest millions to create a product you can distribute for free) to something more akin to manufacturing, where we need to care about COGS.”

In the traditional “movie” model of software, the big upfront cost is product development, and afterward, selling copies generates pure profit. However, in AI, each unit produced comes with a material cost, more like manufacturing widgets in a factory. COGS now matter again, and every AI-powered feature carries an ongoing price tag that reduces the margins traditionally enjoyed by SaaS companies.

Before answering these questions, let’s explore some real-world examples to understand these economics in practice.

The abstract margin discussion comes to life (or death, for profits) when we examine real-world AI product pricing. Many companies have learned the hard way that if you price an AI service like a typical software subscription, you might lose money on each user due to the high usage costs. Here are a few illustrative examples:

Even pricey plans can be unprofitable. OpenAI initially offered ChatGPT for free and then introduced ChatGPT Plus at $20/month for faster, more reliable service. Even at $20, this was likely heavily subsidized or limited (indeed, GPT-4 usage for Plus users is metered). In December 2024, OpenAI rolled out a ChatGPT Pro tier at $200/month aimed at power users and professionals. One would assume $200/mo is plenty to cover costs, yet Sam Altman publicly admitted that OpenAI was losing money on Pro subscriptions.

Why? Because some power users are so active that their queries cost more than $200 to serve. A minority of “AI super-users” reportedly hit 20,000+ queries a month. At roughly $0.004-$0.01 of compute cost per query, 20,000 queries cost $80-$200 to serve, so users above that volume at the higher per-query cost exceed the $200 subscription price on their own.

Moreover, OpenAI introduced new highly intensive features (like “Deep Research” mode, allowing very large or detailed queries) that cost a few dollars in compute per use; a user who maxes out the Deep Research allowance (100 runs/month) could burn hundreds of dollars of OpenAI’s GPU time.

In short, $200/month wasn’t enough to ensure profitability for the heaviest users. Most users won’t hit those extremes, but the plan as a whole is still unprofitable due to that subset of enthusiasts. Altman’s quip that “we’re losing money on Pro” underscores just how high the ceiling on usage can go when the only limit is the user’s imagination (and time).
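The power-user math can be sketched as follows. The query volume and per-query cost range come from the figures above; the $3 cost per Deep Research run is an assumed stand-in for “a few dollars,” not a reported number.

```python
PRO_PRICE = 200.0  # USD per month


def monthly_serving_cost(queries: int, cost_per_query: float,
                         deep_runs: int = 0, cost_per_deep_run: float = 0.0) -> float:
    """Compute cost of serving one subscriber for a month."""
    return queries * cost_per_query + deep_runs * cost_per_deep_run


def break_even_queries(price: float, cost_per_query: float) -> float:
    """Query volume at which compute cost equals the subscription price."""
    return price / cost_per_query


low = monthly_serving_cost(20_000, 0.004)   # cheaper end of the per-query range
high = monthly_serving_cost(20_000, 0.01)   # pricier end of the per-query range
# Assumption: $3 per Deep Research run, 100 runs per month.
deep = monthly_serving_cost(0, 0.0, deep_runs=100, cost_per_deep_run=3.0)

print(f"20,000 queries cost ${low:.0f} to ${high:.0f} to serve")
print(f"Break-even at $0.01/query: {break_even_queries(PRO_PRICE, 0.01):,.0f} queries")
print(f"100 Deep Research runs at an assumed $3 each: ${deep:.0f}")
```

Under these assumptions, a subscriber who maxes out Deep Research alone already costs more than the plan brings in, before counting any ordinary queries.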

A popular AI tool initially priced too low. GitHub Copilot, an AI pair-programmer that suggests code, launched for individual developers at $10 per month. It was a hit with developers, but it turns out this price was well below the cost needed to run the AI (which queries OpenAI’s Codex/GPT models under the hood). According to a report in The Wall Street Journal, Microsoft was losing on average >$20 per user per month on Copilot at that $10 price point. The compute cost to serve each user’s coding queries was roughly $30 per user-month on average, meaning a negative gross margin for the product. Some heavy users were even costing Microsoft as much as $80 in cloud compute monthly!

Essentially, every time a developer accepted a suggestion, Microsoft’s Azure bill was ticking upward. Microsoft initially absorbed these losses, a classic case of a tech company subsidizing usage to gain market adoption.
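Plugging the reported Copilot figures into the standard gross margin formula shows how deep the hole was. This is a minimal sketch using only the price and cost numbers cited above; the function name is illustrative.

```python
def gross_margin(price: float, cogs: float) -> float:
    """Gross margin as a fraction of revenue: (price - COGS) / price."""
    return (price - cogs) / price


COPILOT_PRICE = 10.0      # USD per user per month
AVG_COMPUTE_COST = 30.0   # reported average compute cost per user-month
HEAVY_USER_COST = 80.0    # reported cost for some heavy users

avg_margin = gross_margin(COPILOT_PRICE, AVG_COMPUTE_COST)
heavy_margin = gross_margin(COPILOT_PRICE, HEAVY_USER_COST)

print(f"Average user gross margin: {avg_margin:.0%}")
print(f"Heavy user gross margin:   {heavy_margin:.0%}")
```

A $10 price against $30 of compute is a gross margin of -200%, and the heaviest users sit at -700%.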

However, such a model isn’t sustainable long term. It’s no coincidence that Microsoft later announced pricier Copilot offerings (for example, Copilot for business at $19 per user with some feature upgrades, and an upcoming Copilot X with GPT-4 at even higher rates).

In fact, Microsoft’s strategy for its flagship Office 365 Copilot (which brings AI into Word, Excel, Outlook, etc.) has been to charge $30 per user per month on top of existing Office subscriptions. This steep add-on price reflects a lesson learned: AI features must be priced high enough to cover their substantial incremental costs. The days of $10 unlimited AI usage are numbered.

Enterprise-focused AI with an eye on costs. Anthropic, maker of the Claude AI assistant, primarily sells via API usage and enterprise deals. It has not leaned on a low-cost mass consumer plan the way OpenAI did, likely because it knows each response has a real cost. Anthropic’s public API pricing is quoted per million tokens, ensuring usage directly correlates to revenue (a necessity for its economics).

Despite that, as noted earlier, Anthropic’s gross margins remain around 50-55%, showing that even usage-based pricing doesn’t completely shield you from high COGS.

It just ensures you don’t give the service away at a flat rate.

Other AI services like Midjourney (AI image generation) have also experimented with pricing tiers and limits; Midjourney offers a basic plan around $10/month but limits the number of image generations, precisely because each image costs GPU time. These real examples reinforce a crucial point: Generative AI products often have more in common with utility-like usage pricing or costly services than with traditional unlimited SaaS plans.

To summarize these case studies, here’s a quick comparison of AI product pricing vs. cost:

Table: Examples of AI pricing vs. cost. Even relatively high subscription prices (ChatGPT Pro at $200) can be unprofitable if usage is extremely high, because each query has a real cost. By contrast, traditional SaaS enjoys much higher margins since serving each customer costs little.

Facing these economic pressures, the industry is rapidly adjusting. Product leaders and CFOs alike are asking: How do we deliver AI features without blowing up our unit economics? Several strategic responses have emerged to tackle the margin squeeze:

One obvious lever is to raise prices for AI capabilities or create higher tiers. We’ve seen this with Microsoft’s $30 Copilot add-on for Office and OpenAI’s $200 ChatGPT Pro plan. These higher price points aim to ensure the revenue covers the hefty compute costs. Google is doing something similar with its Workspace AI features (e.g., charging extra for Duet AI in Google Workspace).

The message: if you want the magic of generative AI, it won’t be as cheap as earlier software add-ons. It can’t be, if providers are to stay profitable. Multiple pricing tiers are being rolled out, often with usage limits, to make sure heavy users either pay more or accept throttling.

For instance, OpenAI’s ChatGPT Plus users have a cap on GPT-4 queries per 3 hours (to contain costs at the $20 level), and higher-paying users get higher limits. Charging more (or separately) for AI is quickly becoming standard practice for SaaS vendors.
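One common way to enforce a cap of this kind is a sliding-window counter. The sketch below is not OpenAI’s implementation; the class name and the limit of 40 messages are hypothetical, and only the 3-hour window comes from the text above.

```python
from collections import deque


class SlidingWindowCap:
    """Allow at most `max_requests` per user within a rolling time window."""

    def __init__(self, max_requests: int, window_seconds: float) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._timestamps: deque = deque()

    def allow(self, now: float) -> bool:
        # Drop requests that have aged out of the window.
        while self._timestamps and now - self._timestamps[0] >= self.window_seconds:
            self._timestamps.popleft()
        if len(self._timestamps) < self.max_requests:
            self._timestamps.append(now)
            return True
        return False


THREE_HOURS = 3 * 60 * 60
cap = SlidingWindowCap(max_requests=40, window_seconds=THREE_HOURS)  # hypothetical limit

# Simulate 45 requests, one per second: only the first 40 get through.
allowed = sum(cap.allow(now=float(t)) for t in range(45))
print(f"{allowed} of 45 requests allowed")
# Once the earliest requests age out, capacity frees up again.
print(cap.allow(now=float(THREE_HOURS)))
```

A higher-paying tier would simply be configured with a larger `max_requests`, which is how a cap turns heavy usage into either an upgrade or throttling.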

Unlike traditional flat-rate SaaS, many AI offerings are adopting usage-based pricing models tied to consumption (e.g. per API call, per token, per image generated, etc.). This aligns revenue with cost more directly. OpenAI’s own API is a prime example: it charges developers per 1,000 tokens processed, so that OpenAI gets paid roughly in proportion to the compute expended.

If you’re a SaaS company adding an AI-powered feature, you might move from unlimited use to metered use or build in fair-usage policies.
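A short sketch shows why per-token pricing protects margins. The price and cost per 1,000 tokens below are hypothetical placeholders, not any vendor’s actual rates; the point is that revenue and cost scale together.

```python
# Hypothetical rates in USD per 1,000 tokens (not real vendor pricing).
PRICE_PER_1K_TOKENS = 0.002
COST_PER_1K_TOKENS = 0.001


def usage_charge(tokens: int, rate_per_1k_tokens: float) -> float:
    """Amount for a token volume at a given per-1,000-token rate."""
    return tokens / 1000 * rate_per_1k_tokens


margins = {}
for label, tokens in (("light user", 50_000), ("heavy user", 5_000_000)):
    revenue = usage_charge(tokens, PRICE_PER_1K_TOKENS)
    cost = usage_charge(tokens, COST_PER_1K_TOKENS)
    margins[label] = (revenue - cost) / revenue
    print(f"{label}: revenue ${revenue:.2f}, cost ${cost:.2f}, margin {margins[label]:.0%}")
```

Unlike a flat subscription, the heavy user here carries the same percentage margin as the light one, because the bill grows with the compute consumed.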

Some enterprise software firms are experimenting with outcome-based pricing, essentially a form of usage pricing where the unit is a business outcome (for example, charging per sales meeting booked by an AI scheduling assistant, or per ticket resolved by an AI support bot). This is difficult to get right, but as Outreach CEO Manny Medina put it, “2025 is the end of 80% margins... You basically have two options:

In other words, if AI’s value can be tied to a measurable result for the customer, you might charge based on that result, ensuring you capture enough value to pay for the AI workload. Many companies are still in the early stages of such pricing experiments, but we’re likely to see more of it.

Another reaction is on the cost side of the equation, making delivering AI cheaper through technical means. There’s a growing focus on efficiency: using smaller or fine-tuned models, optimizing code, and choosing cheaper infrastructure.

This highlights that bigger models aren't always necessary. For many tasks, smaller or fine-tuned models running on cheaper infrastructure can be just as effective, and at a fraction of the cost.

For instance, OpenAI’s GPT-3.5 Turbo is cheaper and faster than GPT-4, with only slight trade-offs in quality for many applications. Similarly, open-source models like Llama 2 and Stable Diffusion are being adopted by companies looking to optimize costs, gain full control, and avoid paying per-call fees to third parties.

The goal is to "optimize for cost per unit of value." This can involve using a cascade of models: a fast, inexpensive model for simple tasks, and larger, more powerful models only for complex problems. Such optimizations help improve effective margins.
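The cascade idea can be sketched as a simple router. Everything here is illustrative: the two model functions are toy stand-ins rather than real API calls, the confidence threshold and 20% escalation rate are assumptions, and the per-request costs reuse the Llama 2 and GPT-4 figures quoted earlier in the article.

```python
from typing import Callable, Tuple


def cascade(prompt: str,
            cheap_model: Callable[[str], Tuple[str, float]],
            strong_model: Callable[[str], str],
            min_confidence: float = 0.8) -> Tuple[str, str]:
    """Try the cheap model first; escalate only when its confidence is low."""
    answer, confidence = cheap_model(prompt)
    if confidence >= min_confidence:
        return answer, "cheap"
    return strong_model(prompt), "strong"


def blended_cost(escalation_rate: float, cheap_cost: float, strong_cost: float) -> float:
    """Average cost per request: the cheap model always runs, the strong one sometimes."""
    return cheap_cost + escalation_rate * strong_cost


# Toy stand-ins for illustration only; real ones would call an inference service.
def toy_cheap_model(prompt: str) -> Tuple[str, float]:
    return ("short answer", 0.95) if len(prompt) < 40 else ("unsure", 0.30)


def toy_strong_model(prompt: str) -> str:
    return "detailed answer"


print(cascade("What is 2 + 2?", toy_cheap_model, toy_strong_model))
print(cascade("Summarize the attached 30-page contract and flag risky clauses.",
              toy_cheap_model, toy_strong_model))
print(f"Blended cost per request: ${blended_cost(0.2, 0.0007, 0.084):.4f}")
```

If only one request in five needs the larger model, the blended cost under these assumptions is about $0.0175 per request, roughly a fifth of sending everything to the expensive model.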

The largest tech players are vertically integrating to bend the cost curve. Microsoft, Google, and Amazon are all investing billions in AI infrastructure, whether that’s building custom AI chips (e.g. Google’s TPUs, Microsoft’s rumored AI chip “Athena”) or investing in AI startups to secure favorable cloud usage agreements.

In addition, these cloud providers have invested in AI model companies to lock in cloud business and potentially benefit from bulk discount efficiencies.

For smaller SaaS companies, a more accessible move includes:

All of these moves are focused on one key goal: reducing the cost per AI interaction over time. This leads to improved margins or the ability to offer more competitive pricing.

Some companies, particularly startups, are accepting lower margins in the short term as AI technology and pricing models mature. Thanks to venture funding, AI startups can survive with 50-60% gross margins (or even lower) in the near term. This strategy effectively subsidizes customers with investor money while hoping to reach scale or technical improvements that will eventually increase margins.

However, there are risks involved:

This is where Bill Gurley’s warnings come into play (more on that in the next section). The silver lining is that as AI becomes more ubiquitous, customers may become more willing to pay for it, especially if it delivers clear value. We may be in a transitional period where everyone expects AI features to be “free” add-ons, but that expectation is slowly shifting as the market educates itself.

The industry's response to these challenges is a mix of offensive and defensive tactics, ranging from pricing model changes to cost engineering. SaaS companies that once boasted 85% margins are now adjusting to 60-70% margins or even charging separately to return to 80% margins with a blended model. This period has sparked a grand experimentation in pricing, with options like:

The key trend here is a stronger alignment of price with value and cost than ever before.

The rise of AI has caught the attention of industry veterans and investors, with venture capitalist Bill Gurley particularly vocal about the economic challenges some AI startups face. His "negative gross margin" theory highlights the dangers of AI companies selling services for less than the cost to provide them. While unsustainable, this scenario is common in the fast-paced AI market.

Gurley explains that many AI startups are essentially reselling the services of major AI providers, rather than operating their own infrastructure. For example:

Gurley emphasizes that this is different from traditional software, where value-added resellers could mark up software with minimal cost. With AI, intermediaries face high costs of goods sold (COGS) from the models they’re reselling.

He also points out the risks of price wars in the AI space:

Gurley notes the absence of major acquisitions in the AI space, urging companies to become more aggressive in developing sustainable business models. Without the prospect of being acquired, AI startups must find ways to improve unit economics, such as:

In short, Gurley’s theory urges AI startups to be cautious:

The “negative gross margin” theory serves as a reality check for AI startups: growth is important, but gross margins matter. Public markets care about profitability, and companies with persistently low margins receive lower valuations. Gurley also pointed out that investors are closely scrutinizing the margin profiles of AI startups, worried that high costs could erode long-term value, and he doesn’t want to see a repeat of past bubbles, where companies scaled revenue but spent more than they earned.

Takeaway for SaaS Pricing Leaders:

Simply ignoring this issue in favor of growth could be a serious mistake. Pricing needs to be carefully considered to ensure that the startup can scale profitably and avoid burning through cash.

The rise of AI in SaaS brings both opportunities and challenges. AI-powered features can add significant value for customers, but they also come with new costs that must be managed. Here are some strategic implications for pricing in the AI era:

This sounds obvious, but it’s a new mindset for many SaaS teams. When launching an AI-powered feature, you must account for the incremental cloud/compute cost in your pricing model. If your AI feature costs on average $5 per user to serve each month (in API calls or infrastructure), and you traditionally price your software at $30/user with 85% gross margin, you can’t just throw in the AI feature “for free” and still expect 85% margin. Either the price needs to go up, or the costs need to be offset.

Action point: model the per-user or per-action cost of your AI features and decide how that will be recouped. Some companies are packaging AI features into a higher tier (e.g., a “Pro” plan that costs more), while others use usage-based fees.

The key is to avoid unlimited usage plans that assume zero marginal cost; that assumption no longer holds. You may have to “get used to 60-70% margins” if you don’t adjust pricing, so decide whether that’s an acceptable trade-off.
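The $30 plan with a $5 AI cost from the example above can be worked through in a few lines. This sketch uses only those figures; the variable names are illustrative.

```python
def gross_margin(price: float, cogs: float) -> float:
    """Gross margin as a fraction of revenue: (price - COGS) / price."""
    return (price - cogs) / price


BASE_PRICE = 30.0    # USD per user per month
BASE_MARGIN = 0.85   # gross margin before the AI feature
AI_COST = 5.0        # average monthly AI serving cost per user

base_cogs = BASE_PRICE * (1 - BASE_MARGIN)   # about $4.50
cogs_with_ai = base_cogs + AI_COST           # about $9.50

# Option 1: bundle the AI feature for free at the old price.
margin_if_free = gross_margin(BASE_PRICE, cogs_with_ai)

# Option 2: raise the price until the original margin is restored.
price_to_keep_margin = cogs_with_ai / (1 - BASE_MARGIN)

print(f"Margin if AI is bundled free: {margin_if_free:.1%}")
print(f"Price needed to stay at 85%:  ${price_to_keep_margin:.2f}")
```

Bundling the feature for free drops the margin to about 68%, squarely in the 60-70% band, while holding 85% would require a price of roughly $63, more than double the original.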

AI is pushing more companies toward usage-based pricing (UBP), charging by the amount the feature is used. This model can protect margins by making heavy users pay proportionally more. It also aligns with value delivered in many cases.

For example, if an AI sales tool sets 100 appointments for a customer, that likely generated far more value (and cost) than setting 10 appointments, so charging per appointment or per AI-call makes sense.

Outcome-based pricing is the holy grail form of this: tie price to a successful result (like a sale made or problem solved by the AI). Pricing should be aligned to customer value, but sustainable for the business. If charging by outcome is too complex, even simple tiered usage bands or overage charges can do the job.

Many API-based services have a blend: e.g., X amount of AI credits included, and then pay-as-you-go beyond that. Customers are generally amenable to usage pricing for AI, because it’s intuitive that more usage = more value (just be sure to communicate it well to avoid surprises). The end of flat, all-you-can-eat pricing for AI features is likely here.
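The “included credits plus pay-as-you-go” blend is straightforward to express. In this sketch the base fee, credit allowance, and overage rate are all hypothetical values chosen for illustration.

```python
def monthly_bill(base_fee: float, included_credits: int,
                 credits_used: int, overage_price_per_credit: float) -> float:
    """Base subscription plus a per-credit charge for usage beyond the allowance."""
    overage_credits = max(0, credits_used - included_credits)
    return base_fee + overage_credits * overage_price_per_credit


# Hypothetical plan: $50 base, 1,000 AI credits included, $0.05 per extra credit.
within_allowance = monthly_bill(50.0, 1_000, 800, 0.05)
over_allowance = monthly_bill(50.0, 1_000, 1_500, 0.05)

print(f"800 credits used:   ${within_allowance:.2f}")
print(f"1,500 credits used: ${over_allowance:.2f}")
```

Most customers see a predictable flat bill, while the heavier user in this example pays $75 instead of $50, so extra usage brings extra revenue to offset its compute cost.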

Another approach we see is treating AI features as an add-on module that’s priced separately from the core software subscription. This is effectively what Microsoft did with Office 365 Copilot at $30: it’s positioned as a distinct premium add-on. This can be easier to justify to customers (“The base product is still X, but AI costs extra because it’s costly for us to provide”). It also lets you preserve the economics of your core offering while innovating on top.

One caution: the add-on price needs to reflect value, not just cost. It helps if you can quantifiably explain what the customer gets from the AI, e.g., time saved, output improved, etc. If the add-on is too expensive without clear ROI, customers might not bite.

But if you can show that, say, a $30 AI add-on saves a knowledge worker 5 hours a month, that’s usually a win-win. From a financial perspective, this separation ensures the AI part of your product has its own P&L accountability and doesn’t drag down the whole product’s margin.

Pricing and product leaders should work hand-in-hand with engineering to drive down the cost per AI interaction. Every reduction in COGS gives more pricing flexibility. For instance, if through model optimization you cut your inference cost by 50%, that could mean either improved margins or headroom to lower price (or a bit of both, depending on strategy). Stay on top of developments in AI model efficiency: smaller models, distillation techniques, caching frequent results, and similar techniques can all lower costs.
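Of the techniques listed, caching frequent results is the easiest to illustrate. The sketch below uses Python’s standard `functools.lru_cache`; the `cached_completion` function and its canned return value are stand-ins for a real model call, and exact-match caching like this only helps when prompts repeat verbatim.

```python
from functools import lru_cache

paid_calls = 0  # counts requests that actually reach the (stand-in) model


@lru_cache(maxsize=1024)
def cached_completion(prompt: str) -> str:
    """Return a completion, paying for inference only on a cache miss."""
    global paid_calls
    paid_calls += 1
    return f"answer to: {prompt}"  # stand-in for a real model call


prompts = ["reset password", "refund policy", "reset password",
           "reset password", "refund policy"]
for prompt in prompts:
    cached_completion(prompt)

hit_rate = 1 - paid_calls / len(prompts)
print(f"{paid_calls} paid calls for {len(prompts)} requests (hit rate {hit_rate:.0%})")
```

In this toy workload, five requests trigger only two paid model calls, so the effective cost per request falls by 60%.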

Also, keep an eye on cloud pricing; if you’re at scale, negotiate committed use discounts for GPU instances, or consider cheaper providers. This operational focus is part of Monetizely’s pragmatic approach: pricing doesn’t exist in a vacuum. By coordinating with ops and finance, you can set pricing that reflects not just current costs but anticipated cost improvements (or regressions).

One tip is to periodically revisit your AI unit economics. The AI field is evolving fast, so assumptions from six months ago may be outdated. Make gross margin improvement a team KPI across product, engineering, and finance. The good news is that AI inference costs will come down over time as hardware and algorithms improve, so position yourself to capture those gains.

Strategically, it’s important to set customer expectations that AI features are not a free lunch. The more transparently you can communicate the value they’re getting and why it’s priced as such, the better.

Some companies even share that “this feature processes large amounts of data and thus incurs significant compute resources” in their marketing, subtly signaling that the pricing is justified. Educating customers on the outcomes (e.g., faster workflows, better results) will shift the conversation from cost-plus (“why does it cost $X?”) to value (“it helps you achieve Y, which is worth $X”). Nonetheless, especially for enterprise deals, expect savvy buyers to ask about pricing.

Be ready to articulate your pricing logic. If you have usage-based fees, ensure your customer success and sales teams can explain them and provide guidance so customers don’t feel ambushed by variable bills. In many cases, a hybrid pricing approach can work, e.g., a reasonable base fee (so you recoup some fixed costs) plus a variable fee for heavy use. This gives customers predictability with the option to pay more if they get more value.

The era of AI in SaaS might actually strengthen vendor-customer alignment, because both sides benefit from mindful usage (the vendor doesn’t want to waste cycles any more than the customer wants to waste time on pointless AI queries).

In summary, SaaS pricing leaders need to marry the art of value-based pricing with the science of cost management in the AI age. It’s a more complex equation now: price should track value to the customer, and price ≥ cost to serve (with healthy margin). The most effective strategies find this balance, ensuring AI features drive strong outcomes for users while boosting gross profit. Companies that achieve this will have the ability to continuously invest in AI innovation. Those who fail to strike this balance risk losing customer trust through inflated prices or damaging their own financial health by underpricing and subsidizing too much.

All these considerations lead to a big, strategic question: Can the current AI pricing models be sustained in the long term? The high cost of serving AI presents a challenge, but is it a temporary hurdle to be overcome with technological advancements, or will it remain a permanent feature of software economics?

Optimists point to dropping AI inference costs, with price reductions like OpenAI’s 2023 cuts, new chips such as Nvidia’s H100 and Google’s TPU v5, and ongoing research breakthroughs promising cheaper, faster AI. Sam Altman has even speculated that, eventually, AI might become “too cheap to meter,” suggesting a future where the cost of an AI query is negligible. If this happens, pricing pressure could ease, allowing AI-powered software to return to high gross margins (~80-90%). Companies are banking on this future, investing heavily now at low margins, with hopes of riding the cost curve down as scalability increases.

However, a drop in costs could come with a rise in demand. Historically, when something becomes cheaper, usage tends to rise sharply, sometimes enough to offset the savings. If AI becomes 10x cheaper, we may see 10x more queries as AI becomes integrated into every facet of work and life, leaving total spend roughly unchanged. Moreover, as AI models improve, they tend to become more complex and resource-intensive. The cutting-edge models (like GPT-5 or beyond) could remain expensive, maintaining the need for pricing discipline. This creates a potential cycle: better AI → more use → still significant costs → sustained pricing challenges.

Looking ahead, the future of AI pricing depends on two key paths:

As the market for AI features continues to evolve, it’s crucial for companies to strike a balance between pricing and value. The willingness to pay for AI is growing, but businesses must ensure that their pricing models remain sustainable. With new pricing paradigms likely to emerge, businesses must stay agile and embrace innovation in both AI capabilities and business models.

The key takeaway for SaaS pricing leaders is that they must innovate not just in AI capabilities but also in pricing strategies. The companies that succeed will be those that continuously ask, "Is our AI offering creating enough value to justify its cost?" and adjust their technology or pricing to maintain that balance.

In the end, the future of AI will depend on balancing its incredible capabilities with viable business economics. The companies that get this balance right will thrive, while others may become cautionary tales of the "lost money on every user but made it up in volume" variety.
